Enterprise System Administration and IaaS Datacenter Automation with openQRM 5.2.x

Document Version: 01122014 - Released: September 18, 2015

IaaS Cloud Computing and Datacenter Management framework for professional users.

openQRM Enterprise provides a complete Automated Workflow Engine for all of your bare-metal and VM deployments and for all of your IT subsystems.

Gain higher efficiency through management, automation and monitoring of your Datacenter & Cloud capacities.

openQRM Enterprise Administrator Guide

openQRM base architecture - in short

Recommendation for selecting the operating system for the openQRM Server:

Recommendation for the first Steps after the initial installation

Local deployment versus network deployment

Examples for network-deployment

Local deployment automatic installation and disk cloning

Recipe: Local VM deployment with Citrix (localboot)

Recipe: Local VM deployment with KVM (localboot)

Cloning/snapshotting the KVM VM image

Recipe: Local VM deployment with Libvirt (localboot)

Cloning/snapshotting the Libvirt VM image

Clones and snapshots can be efficiently used for system deployments

Recipe: Local VM Deployment with LXC (localboot)

Cloning/snapshotting the LXC VM image

Clones and snapshots can be efficiently used for system deployments

Recipe: Local VM deployment with openVZ (localboot)

Cloning/snapshotting the openVZ VM image

Clones and snapshots can be efficiently used for system deployments

Recipe: Local VM deployment with Xen (localboot)

Cloning/snapshotting the Xen VM image

Recipe: Local VM deployment with VMware ESXi (localboot)

Recipe: Integrating existing, local-installed systems with Local-Server

Recipe: Integrating existing, local-installed VM with Local-Server

Recipe: Local deployment with Cobbler (automatic Linux installations)

Recipe: Local deployment with FAI (automatic Linux installations)

Network Deployment - DHCPD/TFTPD/Network-Storage

Recipe: Network VM deployment with Citrix (network boot)

Recipe: Network VM deployment with KVM (network boot)

Recipe: Network VM deployment with Libvirt (network boot)

Recipe: Network VM deployment with Xen (network boot)

Recipe: Network VM deployment with VMware ESXi (network boot)

Recipe: Network Deployment with AOE-Storage

Recipe: Network deployment with iSCSI-Storage

Recipe: Network deployment with NFS-Storage

Recipe: Network deployment with LVM-Storage

Recipe: Network deployment with SAN-Boot-Storage

Recipe: Network Deployment with TmpFs-Storage

Recipe: Populating network deployment images via the Image-Shelf

Recipe: Creating new kernel for network deployment

High availability for services (application level) - LCMC

High availability for openQRM Server

Automated IP assignment for the openQRM network with DHCPD

Automated DNS management for the openQRM network with DNS

Automated application deployment with Puppet

Automated monitoring with Nagios and Icinga

Custom Server service check configuration

Automated monitoring with Zabbix

Automated monitoring with Collectd

Automated power management with WOL (wake-up-on-lan)

Accessing remote desktops and VM consoles with NoVNC

Web SSH-Access to remote systems with SshTerm

LVM Device and Volume Group Configuration

Network-device and Bridge Configuration

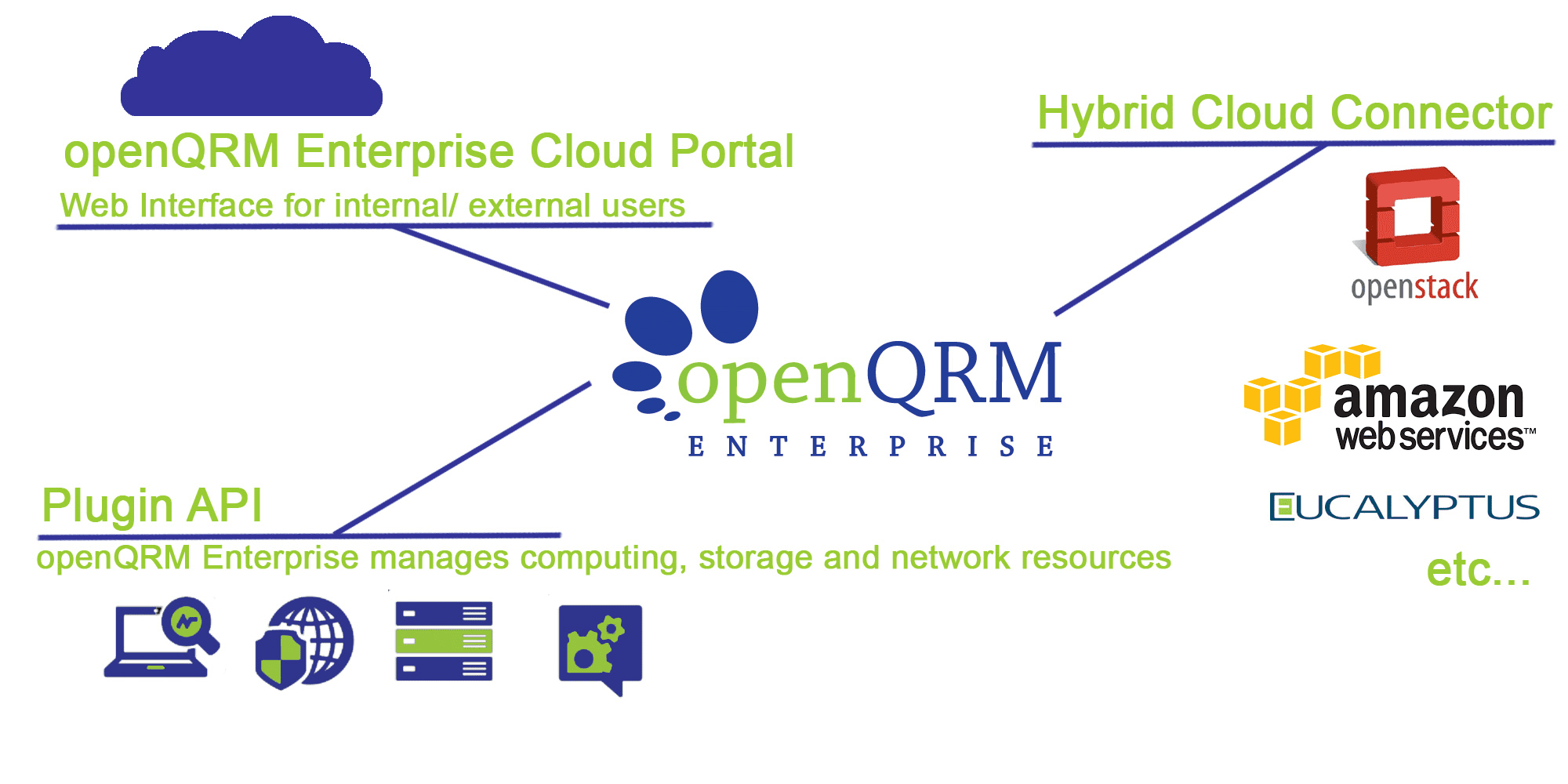

Public and Private Cloud Computing

Hybrid Cloud Computing - Migrating services between Public and Private Clouds

Manage and automate public and private clouds

Import - import AMIs from a public or private Cloud

Automated application deployment

openQRM Enterprise - Enterprise Edition Features

Cloud-Zones: Managing openQRM Clouds in multiple IT Locations

Cloud integration with E-Commerce systems via Cloud-Shop

Centralized User-Management with LDAP

Automated IP/Network/VLAN management with IP-Mgmt

Automated IT-Documentation with I-do-it

Support for the Windows Operating System

Install the openQRM Client for Windows

Automated power-management with IPMI

Network card bonding for network deployment

Automated event mailer to forward critical events in openQRM

Secure, remote access for the openQRM Enterprise support team

Debugging openQRM actions and commands

Explanation of the openQRM Cloud configuration parameters:

In openQRM, a Server (named Appliance in openQRM versions before 5.2) represents a service, e.g. a web application server running on some kind of operating system, on a specific type of virtual machine, on a specific host, with a specific and complete pre-configuration.

Master Object: server

Server Sub Objects: kernel, Image, resource and SLA definition.

Other Objects: storage, event

1. A Server is created from its sub objects.

2. An Image object depends on a Storage object (an Image is always located on a Storage).

3. The resource of a Server can be exchanged.

When a Server is created, started, stopped or removed, openQRM triggers a Server hook that plugins can implement. Through this hook the openQRM server hands over responsibility for plugin-specific actions to the plugin.

Hooks are also available for other openQRM objects such as resources and events. Please check the development plugin for detailed documentation of the openQRM hooks.
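To make the hook idea concrete, the following shell sketch shows how a core could hand a Server event over to every plugin that implements a hook. It is purely illustrative: the function names (`dispatch_server_hook`, `<plugin>_server_hook`) and the plugin names are hypothetical and are not the openQRM hook API, which is documented in the development plugin.

```shell
#!/usr/bin/env bash
# Hypothetical illustration of the Server hook idea; NOT the openQRM hook API.

# Plugins registered for Server events (hypothetical names).
REGISTERED_PLUGINS=(plugin_a plugin_b)

# Each plugin implements "<plugin>_server_hook EVENT SERVER_NAME".
plugin_a_server_hook() { echo "plugin_a: $1 $2"; }
plugin_b_server_hook() { echo "plugin_b: $1 $2"; }

# Simulated core: called when a Server is created, started, stopped or removed.
dispatch_server_hook() {
    local event="$1" server="$2" plugin
    for plugin in "${REGISTERED_PLUGINS[@]}"; do
        # Hand over to the plugin only if it actually implements the hook.
        if declare -F "${plugin}_server_hook" >/dev/null; then
            "${plugin}_server_hook" "$event" "$server"
        fi
    done
}
```

For example, `dispatch_server_hook start webserver01` prints one line per implementing plugin; what "start" means is decided by each plugin, not by the core.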

The deployment and management functionality of openQRM is independent of the operating system of the openQRM server itself; e.g. openQRM running on a Debian system can deploy and manage CentOS/Red Hat based systems.

While openQRM is designed to run on all kinds of Linux distributions, we still recommend the following operating systems for the openQRM Server:

Debian, Ubuntu: fully supported, all functionality works out of the box.

CentOS, Red Hat Enterprise Linux, SUSE: fully supported, some functionality may need additional post-configuration.

Install the following packages: make, subversion

Check out openQRM (or obtain the openQRM Enterprise Edition):

svn checkout http://svn.code.sf.net/p/openqrm/code/trunk openqrm

Change to the trunk/openqrm/src/ directory:

cd openqrm/src/

To build openQRM run “make”

make

To install openQRM in /usr/share/openqrm now run “make install”

make install

By default, the next step initializes the openQRM server with the standard HTTP protocol.

To initialize the openQRM server with HTTPS instead, edit:

vi /usr/share/openqrm/etc/openqrm-server.conf

and adjust:

OPENQRM_WEB_PROTOCOL="http"

to

OPENQRM_WEB_PROTOCOL="https"
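For a non-interactive change (e.g. in a provisioning script), the same edit can be made with sed. This is a sketch that assumes the parameter appears at the beginning of a line in the KEY="value" form shown above and that the new value contains no `|`, `&` or backslash characters; `set_conf_value` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set KEY="VALUE" in an openQRM-style config file.
# Assumes KEY appears at the start of a line as KEY="..." and that VALUE
# contains no '|', '&' or '\' (they are special in the sed replacement).
set_conf_value() {
    local file="$1" key="$2" value="$3"
    # Fail loudly if the key is missing instead of silently doing nothing.
    if ! grep -q "^${key}=" "$file"; then
        echo "error: ${key} not found in ${file}" >&2
        return 1
    fi
    # Keep the original as FILE.bak, then rewrite the matching line(s).
    sed -i.bak "s|^${key}=.*|${key}=\"${value}\"|" "$file"
}
```

Usage, before running “make start”: `set_conf_value /usr/share/openqrm/etc/openqrm-server.conf OPENQRM_WEB_PROTOCOL https`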

To start (and initialize openQRM at first startup) run “make start”

make start

To stop openQRM and all its plugin services run “make stop”

make stop

To update a running openQRM server please run “svn up && make update”

svn up && make update

openQRM version <= 5.0: please note that after “make update” you need to re-configure the plugin boot-services!

Hint: On Red Hat based Linux distributions (e.g. CentOS), SELinux and the iptables firewall must be disabled before running “make start”!
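Before running “make start” on a Red Hat based system, the current SELinux mode can be checked with getenforce from the SELinux user-space tools. The sketch below (hypothetical helper name `selinux_mode`) only reports the mode and changes nothing. For reference, `setenforce 0` switches a running system to permissive mode (which is not the same as disabled); a permanent change is made by setting SELINUX=disabled in /etc/selinux/config and rebooting. The firewall check depends on the distribution release and is not covered here.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the SELinux mode, or "not-installed" when the
# getenforce tool is absent (e.g. on most Debian/Ubuntu systems).
selinux_mode() {
    if command -v getenforce >/dev/null 2>&1; then
        getenforce
    else
        echo "not-installed"
    fi
}

# Warn before "make start" if SELinux is still enforcing.
if [ "$(selinux_mode)" = "Enforcing" ]; then
    echo "warning: SELinux is enforcing; disable it before 'make start'" >&2
fi
```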

Hint: openQRM supports a MySQL or PostgreSQL database backend. If PostgreSQL is used, please make sure the php-pgsql package (the PostgreSQL module for PHP/Apache) is installed.

Update /usr/share/openqrm/plugins/dns/etc/openqrm-plugin-dns.conf and set the OPENQRM_SERVER_DOMAIN parameter to a domain name for your openQRM network.

By default it is set to:

OPENQRM_SERVER_DOMAIN="oqnet.org"

To configure this parameter please use the Configure Action in the Plugin-Manager.
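To confirm which domain is currently configured (for example after using the Configure action), the value can be read back from the configuration file. A minimal read-only sketch, assuming the OPENQRM_SERVER_DOMAIN="..." line format shown above; `get_conf_value` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of KEY from a file containing
# KEY="value" lines. If KEY occurs more than once, the last one wins,
# matching what the shell would do when sourcing the file.
get_conf_value() {
    local file="$1" key="$2"
    # Capture the text between the double quotes; ignore trailing blanks.
    sed -n "s/^${key}=\"\(.*\)\"[[:space:]]*\$/\1/p" "$file" | tail -n 1
}
```

On an unchanged installation, `get_conf_value /usr/share/openqrm/plugins/dns/etc/openqrm-plugin-dns.conf OPENQRM_SERVER_DOMAIN` should print the default oqnet.org.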

Then enable and start the DNS plugin via the Plugin-Manager. This automatically pre-configures and starts a BIND DNS server on openQRM for the configured domain.

The DNS plugin now handles hostname resolution on the openQRM management network and automatically adds and removes Server names and the IP addresses of their resources in the DNS zones.

As a second step, enable the DHCPD plugin. Enabling the DHCPD plugin automatically creates a dhcpd configuration at:

/usr/share/openqrm/plugins/dhcpd/etc/dhcpd.conf

By default, the pre-configuration provides the full range of IP addresses of your openQRM management network. You may want to review the automatically generated configuration and adjust it to your needs.
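To review the generated address pool without reading the whole file, the subnet and range statements can be listed. A minimal sketch, assuming standard ISC dhcpd syntax with one statement per line; `show_dhcp_ranges` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the subnet and range statements of a dhcpd
# configuration. Commented-out lines (starting with '#') are not matched.
show_dhcp_ranges() {
    local file="$1"
    # Print matching statements with leading indentation removed.
    grep -E '^[[:space:]]*(subnet|range)[[:space:]]' "$file" | sed 's/^[[:space:]]*//'
}
```

Usage: `show_dhcp_ranges /usr/share/openqrm/plugins/dhcpd/etc/dhcpd.conf`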

Now start the DHCPD plugin and it will automatically provide IP addresses from your management network for every new system (resource) in openQRM.

If you decide for network deployment please also enable and start the TFTPD plugin.

For more information on the difference between local deployment and network deployment in openQRM, please continue with the next chapter, Local deployment versus network deployment.

Using any kind of local-deployment method in openQRM results in a system with its operating system deployed to its local disk.

For physical systems this is normally one (or more) physical hard disks; for virtual machines the local disk can be any type of storage attached to the virtualization host running the VM (local disk, iSCSI, SAN, NAS, NFS, a distributed and/or clustered storage, etc.).

It is recommended to use one or more remote, highly available network storage systems to host the storage space for the virtualization hosts.

- Automatic installation (or disk cloning) to the hard disk of a physical server (e.g. FAI, LinuxCOE, Cobbler, Opsi, Clonezilla).

- Automatic installation (or disk cloning) to a virtual hard disk of a virtual machine (e.g. FAI, LinuxCOE, Cobbler, Opsi, Clonezilla in combination with Citrix (localboot), KVM (localboot), Xen (localboot)).

- Image-based provisioning of a virtual hard disk of a virtual machine (clone/snap) (e.g. Citrix (localboot), KVM (localboot), Libvirt (localboot), Xen (localboot), openVZ (localboot), LXC (localboot)).

Using any kind of network deployment in openQRM results in a system whose operating system is located on, and deployed from, a remote storage system. Such systems do not use a local disk; their root filesystem resides on remote network storage.

- Image-based provisioning of physical systems (clone/snap) (e.g. a physical server in combination with any network-storage plugin such as AOE-Storage, iSCSI-Storage, NFS-Storage, LVM-Storage and TMPFS-Storage).

- Image-based provisioning of a virtual machine (clone/snap) (e.g. Citrix (network boot), KVM (network boot), Libvirt (network boot), Xen (network boot), VMware (network boot) in combination with any network-storage plugin such as AOE-Storage, iSCSI-Storage, NFS-Storage, LVM-Storage and TMPFS-Storage).

1. Install and set up openQRM on a physical system or on a virtual machine

2. Enable the following plugins: dns (optional), dhcpd (optional), citrix, tftpd, local-server, novnc

3. Configure the citrix boot-service

Please check the Citrix help section on how to use the Plugin-Manager "configure" option or the openqrm utility to apply a custom boot-service configuration.

Adapt the following configuration parameters according to your virtualization host bridge configuration:

CITRIX_MANAGEMENT_INTERFACE

CITRIX_EXTERNAL_INTERFACE

In case Citrix (localboot) virtual machines should be deployed via the openQRM Cloud please also configure:

CITRIX_DEFAULT_SR_UUID

CITRIX_DEFAULT_VM_TEMPLATE

4. Select a separate physical server as the Citrix virtualization host (needs VT/Virtualization Support available and enabled in the system BIOS).

Install Citrix XenServer on the physical system dedicated as the Citrix virtualization host

Hint: Enable SSH login on the Citrix XenServer

The openQRM NoVNC plugin provides access to the Citrix VM console (requires SSH Login enabled on the Citrix XenServer configuration)

5. Copy the Citrix “xe” command-line utility to the openQRM system as /usr/bin/xe.
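After copying, a quick check confirms that the binary is in place and executable; if the copy lost the execute bit, `chmod 0755 /usr/bin/xe` restores it. A sketch with the hypothetical helper name `check_executable`; it does not verify that xe can actually reach the XenServer.

```shell
#!/usr/bin/env bash
# Hypothetical helper: confirm that a file exists, is a regular file and is
# executable. Defaults to the location used for the Citrix "xe" utility.
check_executable() {
    local path="${1:-/usr/bin/xe}"
    if [ -f "$path" ] && [ -x "$path" ]; then
        echo "ok: ${path}"
    else
        echo "missing or not executable: ${path}" >&2
        return 1
    fi
}
```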

6. Auto-discover the Citrix XenServer system via the Citrix auto-discovery. Integration via auto-discovery automatically creates a Citrix virtualization host Server.

7. Create a Citrix storage (storage object for the virtual machine images).

- Use the Storage Create Wizard to create a new storage object using the same resource as selected for the Citrix Server and set the deployment type to citrix-deployment

Hint: Citrix XenServer virtual machines are stored on a Citrix SR (storage repository).

- You can use the "nfs-storage" and/or the "iscsi-storage" plugin to easily create a NAS and/or iSCSI datastore to be used as a Citrix storage repository!

- Then use the included SR datastore manager to connect a NAS- and/or iSCSI Datastore.

1. Create a Citrix (localboot) virtual machine.

- Use the Server Create wizard to create a new Server object.

- Create a new Citrix (localboot) VM resource in step 2.

- Select the previously created Citrix Server object for creating the new Citrix (localboot) VM.

- Create a new Citrix VM image in step 3.

- Select the previously created Citrix storage object for creating the new Citrix VM image volume.

Creating a new volume automatically creates a new image object in openQRM.

Please note: For an initial installation of the image of the virtual machine, you may want to edit the image details to attach an automatic operating system installation on first start-up.

Hint: Easily create automatic installation profiles that can be attached to a Citrix VM image object via the openQRM Install-from-Template mechanism, using the following plugins:

Cobbler, FAI, LinuxCOE, Opsi, Clonezilla

- Start the Server

Starting the Server automatically combines the Citrix (localboot) VM (Resource Object) and the Citrix VM volume (image object).

Stopping the Server will uncouple resource and image.

Hint: To enable further management functionalities of openQRM within the virtual machine's operating system please install the openQRM-local-vm-client in the VM. Please refer to Integrating existing, local-installed VM with Local-Server.

Through the integrated Citrix volume management, existing pre-installed images can be duplicated with the Clone mechanism. Clones can be efficiently used for system deployments.

Clone

Cloning a volume of a Citrix VM image object results in a 1-to-1 copy of the source volume. A new image object for this volume copy is automatically created in openQRM. The time needed to create a clone depends on the actual volume size, hardware and network performance, etc. All data from the origin volume is copied to the new clone volume.

Install and set up openQRM on a physical system or on a virtual machine

1. Enable the following plugins: dns (optional), dhcpd (optional), tftpd, local-server, novnc, device-manager, network-manager, kvm

2. Configure the KVM boot-service

Please check the KVM help section on how to use the Plugin-Manager "configure" option or the openQRM utility to apply a custom boot-service configuration.

Adapt the following configuration parameters according to your virtualization host bridge configuration. This network-configuration is only used by the openQRM Cloud to auto-create VMs.

OPENQRM_PLUGIN_KVM_BRIDGE_NET1

OPENQRM_PLUGIN_KVM_BRIDGE_NET2

OPENQRM_PLUGIN_KVM_BRIDGE_NET3

OPENQRM_PLUGIN_KVM_BRIDGE_NET4

OPENQRM_PLUGIN_KVM_BRIDGE_NET5

If kvm-bf-deployment (blockfile deployment) is used, also configure:

OPENQRM_PLUGIN_KVM_FILE_BACKEND_DIRECTORIES
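Since these bridge names are used when the openQRM Cloud auto-creates VMs, it can help to check them against the interfaces that exist on the virtualization host. A sketch, assuming the KEY="value" format in /usr/share/openqrm/plugins/kvm/etc/openqrm-plugin-kvm.conf and that the check runs on the host itself (or with a copy of that file); `check_kvm_bridges` is hypothetical, and it only checks that an interface of that name exists, not that it is a bridge.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check that the bridges named in
# OPENQRM_PLUGIN_KVM_BRIDGE_NET1..5 exist as network interfaces on this host.
# Assumes KEY="value" lines in the config; empty or absent keys are skipped.
# SYS_NET_DIR can be overridden (e.g. for testing); default is sysfs.
SYS_NET_DIR="${SYS_NET_DIR:-/sys/class/net}"

check_kvm_bridges() {
    local conf="$1" n key br missing=0
    for n in 1 2 3 4 5; do
        key="OPENQRM_PLUGIN_KVM_BRIDGE_NET${n}"
        # Extract the quoted value of this key (last occurrence wins).
        br="$(sed -n "s/^${key}=\"\(.*\)\"[[:space:]]*\$/\1/p" "$conf" | tail -n 1)"
        if [ -z "$br" ]; then
            continue
        fi
        if [ -e "${SYS_NET_DIR}/${br}" ]; then
            echo "${key}=${br}: present"
        else
            echo "${key}=${br}: MISSING" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

Usage: `check_kvm_bridges /usr/share/openqrm/plugins/kvm/etc/openqrm-plugin-kvm.conf` returns a non-zero status if any configured bridge is missing.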

3. Select a physical server as the virtualization host (needs VT/virtualization support available and enabled in the system BIOS).

Please note: The system dedicated to be the virtualization host can be the openQRM server system itself or a remote physical server integrated via the Local-Server plugin.

Please check Integrating existing, local-installed systems with Local-Server.

Please make sure this system meets the following requirements:

- Automatic package installation must be enabled so that the system can fetch package dependencies. All required package dependencies are resolved automatically when the first action runs!

- For KVM LVM storage: One (or more) LVM volume group(s) with free space dedicated to KVM VM storage.

- For KVM blockfile storage: free space dedicated to KVM VM storage, possibly using remote NAS/NFS storage space.

- One or more bridges configured for the virtual machines (e.g. br0, br1, etc.).
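The LVM requirement can be checked on the host with the standard vgs command. The sketch below filters vgs output read from stdin and prints the volume groups with at least a given amount of free space (in GiB, since lower-case LVM units are powers of 1024); `vgs_with_free_space` is a hypothetical helper name, and the threshold is up to you.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "VG_NAME FREE_GIB" lines on stdin (as printed by
#   vgs --noheadings --units g --nosuffix -o vg_name,vg_free
# ) and print the volume groups with at least MIN_GIB of free space.
vgs_with_free_space() {
    local min_gib="${1:-1}"
    # Field 1 is the VG name, field 2 the free space in GiB.
    awk -v min="$min_gib" 'NF >= 2 && ($2 + 0) >= (min + 0) { print $1, $2 }'
}
```

Usage on the virtualization host (usually as root): `vgs --noheadings --units g --nosuffix -o vg_name,vg_free | vgs_with_free_space 20`. An empty result means no volume group has the requested free space.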

1. Create a KVM Server (Server object for the virtualization host).

In case the openQRM server is the physical system dedicated to be the virtualization host, please use the Server Create wizard to create a new Server object using the openQRM server resource.

2. After creating the Server, edit it and set Virtualization to KVM Host.

In case a remote physical system, integrated via the Local-Server plugin, is the virtualization host, the system integration has already created a Server for this system. Simply edit it and set Virtualization to KVM Host.

3. Create a KVM storage (storage object for the virtual machine images).

Use the Storage Create wizard to create a new storage object using the same resource as selected for the KVM Server and set the deployment type to either kvm-lvm-deployment or kvm-bf-deployment.

For kvm-lvm-deployment the image volumes are created as LVM logical volumes on available LVM volume groups on the storage system.

For kvm-bf-deployment the image volumes are created as block files within configurable directories.

Please check /usr/share/openqrm/plugins/kvm/etc/openqrm-plugin-kvm.conf for the configuration options.

4. Create a KVM (localboot) virtual machine

- Use the Server Create wizard to create a new Server object.

- Create a new KVM (localboot) VM resource in step 2.

- Select the previously created KVM Server object for creating the new KVM (localboot) VM.

Please note: For an initial installation of the image of the virtual machine, you may want to configure the VM to boot from a prepared ISO image to start a manual or automatic operating system installation on first start-up.

Hint: Easily create an automatic installation ISO image with the LinuxCOE plugin!

The automatic installation ISO images of LinuxCOE are automatically available for selection on the virtualization host Server at /linuxcoe-iso

- Create a new KVM VM image in step 3.

- Select the previously created KVM storage object for creating the new KVM VM image volume.

Creating a new volume automatically creates a new image object in openQRM.

Please note: For an initial installation of the image of the virtual machine, you may want to edit the image details to attach an automatic operating system installation on first start-up.

Hint: Easily create an automatic installation profile which can be attached via openQRM Install-from-Template mechanism to a KVM VM image object via the following plugins: Cobbler, FAI, LinuxCOE, Opsi, Clonezilla

- Start the Server

Starting the Server automatically combines the KVM (localboot) VM (resource object) and the KVM VM volume (image object).

Stopping the Server will uncouple resource and image.

Hint: After the operating system installation on the KVM VM volume, the VM is normally still set to boot from the ISO image. To reconfigure the VM to boot directly from its locally installed virtual disk, follow the steps below:

Stop the Server

Update the virtual machine via the VM manager

Start the Server again

To enable further management functionalities of openQRM within the virtual machine's operating system please install the openQRM-local-vm-client in the VM. Please refer to integrating existing, local-installed VM with Local-Server.

Through the integrated KVM volume management, existing pre-installed images can be duplicated with the Clone and Snapshot mechanism.

Clones and snapshots can be efficiently used for system deployments.

Clone

Cloning a volume of a KVM VM image object results in a 1-to-1 copy of the source volume. A new image object for this volume copy is automatically created in openQRM.

The time needed to create a clone depends on the actual volume size, hardware and network performance, etc. All data from the origin volume is copied to the new clone volume.

Snapshot

In case of kvm-lvm-deployment the underlying storage layer (LVM) provides the snapshot-functionality.

A snapshot results in a 1-to-1 copy-on-write (COW) volume which redirects all read calls to the origin and its write calls to the preserved storage space for the snapshot.

Creating a snapshot takes only about a second. It involves no data transfer, and storage space in the snapshot's reserved area is only consumed as data changes.

That means a snapshot only saves changes to the origin and is a great way to efficiently save storage space for deployments.
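To illustrate the difference at the LVM level, the sketch below only prints (does not run) plain LVM commands corresponding to a clone and to a snapshot of a logical volume. openQRM's volume manager performs these operations itself and may do so differently; the helper names and the volume group and volume names are hypothetical, and a clone volume must be at least as large as its origin.

```shell
#!/usr/bin/env bash
# Hypothetical dry-run helpers: they only PRINT the plain LVM commands that
# correspond to a clone and a snapshot; nothing is executed.

# Clone: allocate a full-size volume, then copy every block of the origin.
lvm_clone_cmds() {
    local vg="$1" origin="$2" clone="$3" size="$4"
    echo "lvcreate --size ${size} --name ${clone} ${vg}"
    echo "dd if=/dev/${vg}/${origin} of=/dev/${vg}/${clone} bs=4M"
}

# Snapshot: reserve only a copy-on-write area; no data is copied up front.
lvm_snapshot_cmds() {
    local vg="$1" origin="$2" snap="$3" cow_size="$4"
    echo "lvcreate --snapshot --size ${cow_size} --name ${snap} /dev/${vg}/${origin}"
}
```

For example, `lvm_snapshot_cmds vg0 web01 web01-snap 2G` prints `lvcreate --snapshot --size 2G --name web01-snap /dev/vg0/web01`. A classic LVM snapshot becomes invalid if its copy-on-write area fills up, so the reserved size should cover the expected amount of change.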

Howto

Virtualization with KVM and openQRM 5.2 on Debian Wheezy at http://www.openqrm-enterprise.com/resources/documentation-howtos/howtos/virtualization-with-kvm-and-openqrm-51-on-debian-wheezy.html

1. Install and set up openQRM on a physical system or on a virtual machine

2. Enable the following plugins: dns (optional), dhcpd (optional), tftpd, local-server, novnc, libvirt

3. Configure the Libvirt boot-service

Please check the Libvirt help section on how to use the Plugin-Manager "configure" option or the openQRM utility to apply a custom boot-service configuration.

Adapt the following configuration parameters according to your virtualization host bridge configuration. This network-configuration is only used by the openQRM Cloud to auto-create VMs.

OPENQRM_PLUGIN_LIBVIRT_BRIDGE_NET1

OPENQRM_PLUGIN_LIBVIRT_BRIDGE_NET2

OPENQRM_PLUGIN_LIBVIRT_BRIDGE_NET3

OPENQRM_PLUGIN_LIBVIRT_BRIDGE_NET4

OPENQRM_PLUGIN_LIBVIRT_BRIDGE_NET5

OPENQRM_PLUGIN_LIBVIRT_PRIMARY_NIC_TYPE

OPENQRM_PLUGIN_LIBVIRT_ADDITIONAL_NIC_TYPE

OPENQRM_PLUGIN_LIBVIRT_VM_DEFAULT_VNCPASSWORD

4. Select a physical server as the virtualization host (needs VT/virtualization support available and enabled in the system BIOS).

Please note: The system dedicated to be the virtualization host can be the openQRM server system itself or a remote physical server integrated via the Local-Server plugin.

Please check integrating existing, local-installed systems with Local-Server.

Please make sure this system meets the following requirements:

- Automatic package installation must be enabled so that the system can fetch package dependencies. All required package dependencies are resolved automatically when the first action runs!

5. Create a Libvirt Server (Server object for the virtualization host). In case the openQRM server is the physical system dedicated to be the virtualization host, please use the Server Create wizard to create a new Server object using the openQRM server resource.

6. After creating the Server, edit it and set Virtualization to Libvirt Host.

In case a remote physical system, integrated via the Local-Server plugin, is the virtualization host, the system integration has already created a Server for this system. Simply edit it and set Virtualization to Libvirt Host.

7. A Libvirt storage (storage object for the virtual machine images) is automatically created when updating the Libvirt Server to a Libvirt Host.

8. Create a Libvirt (localboot) virtual machine.

- Use the Server Create wizard to create a new Server object.

- Create a new Libvirt (localboot) VM resource in step 2.

- Select the previously created Libvirt Server object for creating the new Libvirt (localboot) VM.

Please note: For an initial installation of the image of the virtual machine, you may want to configure the VM to boot from a prepared ISO image to start a manual or automatic operating system installation on first start-up.

Hint: Easily create an automatic installation ISO image with the LinuxCOE plugin! The automatic installation ISO images of LinuxCOE are automatically available for selection on the virtualization host Server at /linuxcoe-iso.

- Create a new Libvirt VM image in step 3.

- Select the previously created Libvirt storage object for creating the new Libvirt VM image volume.

Creating a new volume automatically creates a new image object in openQRM.

Please note: For an initial installation of the image of the virtual machine, you may want to edit the image details to attach an automatic operating system installation on first start-up.

Hint: Easily create automatic installation profiles that can be attached to a Libvirt VM image object via the openQRM Install-from-Template mechanism, using the following plugins: Cobbler, FAI, LinuxCOE, Opsi, Clonezilla

- Start the Server

Starting the Server automatically combines the Libvirt (localboot) VM (resource object) and the Libvirt VM volume (image object).

Stopping the Server will uncouple resource and image.

Hint: After the operating system installation on the Libvirt VM volume, the VM is normally still set to boot from the ISO image. To reconfigure the VM to boot directly from its locally installed virtual disk, follow the steps below:

Stop the Server

Update the virtual machine via the VM manager

Start the Server again

To enable further management functionalities of openQRM within the virtual machine's operating system please install the openqrm-local-vm-client in the VM.

Please refer to integrating existing, local-installed VM with Local-Server.

Through the integrated Libvirt volume management, existing pre-installed images can be duplicated with the Clone and Snapshot mechanism.

Clone

Cloning a volume of a Libvirt VM image object results in a 1-to-1 copy of the source volume. A new image object for this volume copy is automatically created in openQRM. The time needed to create a clone depends on the actual volume size, hardware and network performance, etc. All data from the origin volume is copied to the new clone volume.

Snapshot

In case of libvirt-lvm-deployment the underlying storage layer (LVM) provides the snapshot functionality. A snapshot results in a 1-to-1 copy-on-write (COW) volume which redirects all read calls to the origin and its write calls to the preserved storage space for the snapshot.

Creating a snapshot takes only about a second. It involves no data transfer, and storage space in the snapshot's reserved area is only consumed as data changes.

That means a snapshot only saves changes to the origin and is a great way to efficiently save storage space for deployments.

1. Install and set up openQRM on a physical system or on a virtual machine

2. Enable the following plugins: dns (optional), dhcpd (optional), local-server, device-manager, network-manager, lxc

3. Configure the LXC boot-service

Please check the LXC help section on how to use the Plugin-Manager "configure" option or the openQRM utility to apply a custom boot-service configuration.

Adapt the following configuration parameters according to your virtualization host bridge configuration. This network-configuration is only used by the openQRM Cloud to auto-create VMs.

OPENQRM_PLUGIN_LXC_BRIDGE

OPENQRM_PLUGIN_LXC_BRIDGE_NET1

OPENQRM_PLUGIN_LXC_BRIDGE_NET2

OPENQRM_PLUGIN_LXC_BRIDGE_NET3

OPENQRM_PLUGIN_LXC_BRIDGE_NET4

4. Select a physical server as the Virtualization Host

Please note: The system dedicated to be the virtualization host can be the openQRM server system itself or a remote physical server integrated via the Local-Server plugin.

Please check Integrating existing, local-installed systems with Local-Server.

Please make sure this system meets the following requirements:

- Automatic package installation must be enabled so that the system can fetch package dependencies. All required package dependencies are resolved automatically when the first action runs!

- For LXC LVM storage: One (or more) LVM volume group(s) with free space dedicated to LXC VM storage.

- One or more bridges configured for the virtual machines (e.g. br0, br1, etc.).

1. Create an LXC Server (Server object for the virtualization host).

In case the openQRM server is the physical system dedicated to be the virtualization host, please use the Server Create wizard to create a new Server object using the openQRM server resource.

2. After creating the Server, edit it and set Virtualization to LXC Host.

In case a remote physical system, integrated via the Local-Server plugin, is the virtualization host, the system integration has already created a Server for this system. Simply edit it and set Virtualization to LXC Host.

3. Create an LXC storage (storage object for the virtual machine images).

- Use the Storage Create wizard to create a new storage object using the same resource as selected for the LXC Server and set the deployment type to lxc-deployment.

- The image volumes are created as LVM logical volumes on available LVM volume groups on the storage system.

1. Create an LXC (localboot) virtual machine.

- Use the Server Create wizard to create a new Server object.

- Create a new LXC (localboot) VM resource in step 2.

- Select the previously created LXC Server object for creating the new LXC (localboot) VM.

- Create a new LXC VM image in step 3.

- Select the previously created LXC storage object for creating the new LXC VM image volume.

Creating a new volume automatically creates a new image object in openQRM.

Hint: For an initial installation of the image of the virtual machine, the LXC volume manager provides an easy way to upload ready-made LXC operating system templates, which can then be deployed directly to the LXC volumes.

Please check: http://wiki.openvz.org/Download/template/precreated

- Start the Server

Starting the Server automatically combines the LXC (localboot) VM (resource object) and the LXC VM volume (image object).

Stopping the Server will uncouple resource and image.

To enable further management functionalities of openQRM within the virtual machine's operating system please install the openqrm-local-vm-client in the VM.

Please refer to Integrating existing, local-installed VM with Local-Server.

Through the integrated LXC volume management, existing pre-installed images can be duplicated with the Clone and Snapshot mechanism.

Clone

Cloning a volume of an LXC VM image object results in a 1-to-1 copy of the source volume. A new image object for this volume copy is automatically created in openQRM. The time needed to create a clone depends on the actual volume size, hardware and network performance, etc. All data from the origin volume is copied to the new clone volume.

Snapshot

In the case of lxc-lvm-deployment, the underlying storage layer (LVM) provides the snapshot functionality. A snapshot results in a 1-to-1 copy-on-write (COW) volume which redirects all read calls to the origin and its write calls to the preserved storage space for the snapshot.

Creating a snapshot takes only about a second. It involves no data transfer, and storage space in the snapshot's reserved area is only consumed as data changes. That means a snapshot only saves changes to the origin and is a great way to efficiently save storage space for deployments.

Install and set up openQRM on a physical system or on a virtual machine

1. Enable the following plugins: dns (optional), dhcpd (optional), local-server, device-manager, network-manager, openvz

2. Configure the openVZ boot-service

Please check the openVZ help section on how to use the Plugin-Manager "configure" option or the openQRM utility to apply a custom boot-service configuration.

Adapt the following configuration parameters according to your virtualization host bridge configuration. This network-configuration is only used by the openQRM Cloud to auto-create VMs.

OPENQRM_PLUGIN_OPENVZ_BRIDGE

OPENQRM_PLUGIN_OPENVZ_BRIDGE_NET1

OPENQRM_PLUGIN_OPENVZ_BRIDGE_NET2

OPENQRM_PLUGIN_OPENVZ_BRIDGE_NET3

OPENQRM_PLUGIN_OPENVZ_BRIDGE_NET4

1. Select a physical server as the virtualization host

Please notice: This System dedicated to be the virtualization host can be the openQRM server system itself or it can be a remote physical server integrated via the Local-Server Plugin.

Please check integrating existing, local-installed Systems with Local-Server. Make sure this system meets the following requirements:

- Enable automatic packages installation capabilities so that it can fetch package dependencies. All eventual required package dependencies are resolved automatically during the first action running!

- For openVZ LVM storage: One (or more) lvm volume group(s) with free space dedicated for the openVZ VM storage

- One or more bridges configured for the virtual machines (e.g. br0, br1, etc.)
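The bridge requirement above can be checked from a shell before integrating the host. The sketch below is a hypothetical helper, not an openQRM tool. It relies on the Linux sysfs convention that a bridge device has a bridge/ subdirectory under /sys/class/net/<name>/. Free space per volume group can be listed separately with the standard LVM command vgs -o vg_name,vg_free.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check (not part of openQRM): verify that the
# bridges the VMs should attach to exist on the virtualization host.
# On Linux every bridge has a "bridge" subdirectory in sysfs.

# check_bridges SYSFS_NET_DIR BRIDGE...
# Prints one line per bridge; returns 1 if any bridge is missing.
check_bridges() {
  local net_dir="$1"; shift
  local br missing=0
  for br in "$@"; do
    if [ -d "$net_dir/$br/bridge" ]; then
      echo "ok: $br"
    else
      echo "missing: $br"
      missing=1
    fi
  done
  return "$missing"
}

# On a real host, for example:
#   check_bridges /sys/class/net br0 br1
```

Passing the sysfs directory as an argument keeps the helper testable against a fake directory tree.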

1. Create an openVZ Server (Server object for the virtualization host).

If the openQRM server itself is the physical system dedicated to be the virtualization host, use the Server Create wizard to create a new Server object using the openQRM server resource.

2. After creating the Server, edit it and set Virtualization to openVZ Host.

If the virtualization host is a remote physical system integrated via the Local-Server plugin, the system integration has already created a Server for it. Simply edit it and set Virtualization to openVZ Host.

3. Create an openVZ storage (storage object for the virtual machine images).

Use the Storage Create wizard to create a new storage object using the same resource as selected for the openVZ Server, and set the deployment type to openvz-deployment.

The image volumes are created as LVM logical volumes on available LVM volume groups on the storage system.

4. Create an openVZ (localboot) virtual machine.

- Use the Server Create wizard to create a new Server object

- Create a new openVZ (localboot) VM resource in step 2

- Select the previously created openVZ Server object for creating the new openVZ (localboot) VM.

- Create a new openVZ VM image in step 3

- Select the previously created openVZ storage object for creating the new openVZ VM image volume.

Creating a new volume automatically creates a new image object in openQRM.

Hint: For the initial installation of the VM image, the openVZ manager provides an easy way to upload ready-made openVZ operating system templates, which can then be deployed directly to the openVZ volumes.

Please check http://wiki.openvz.org/Download/template/precreated

Starting the Server automatically combines the openVZ VM (resource object) and the openVZ VM volume (image object).

Stopping the Server will uncouple resource and image.

To enable further openQRM management functionality within the VM's operating system, install the openqrm-local-vm-client in the VM.

Please refer to “Integrating existing, local-installed VM with Local-Server”.

Cloning/snapshotting the openVZ VM image

Through the integrated openVZ volume management, existing pre-installed images can be duplicated with the Clone and Snapshot mechanisms.

Clone

Cloning a volume of an openVZ VM image object results in a 1-to-1 copy of the source volume. A new image object for this volume copy is automatically created in openQRM.

The time needed to create a clone depends on the volume size, hardware and network performance, etc.

All data of the origin volume is copied to the new volume.
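This generic LVM sketch illustrates why a clone takes longer than a snapshot. A full copy allocates a new logical volume and copies every block of the source into it. The sketch only prints the commands. The names, the 10G size and the use of dd are assumptions for illustration, not a description of openQRM's internal clone procedure.

```shell
#!/usr/bin/env bash
# Generic LVM illustration, not openQRM's internal clone procedure:
# print the commands for a full 1-to-1 copy of a logical volume.

# clone_cmds VG SOURCE_LV CLONE_NAME SIZE
# SIZE must be at least the size of SOURCE_LV.
clone_cmds() {
  local vg="$1" src="$2" clone="$3" size="$4"
  # 1. Allocate a new, independent logical volume.
  printf 'lvcreate --size %s --name %s %s\n' "$size" "$clone" "$vg"
  # 2. Copy every block of the source; runtime grows with volume size.
  printf 'dd if=/dev/%s/%s of=/dev/%s/%s bs=4M\n' "$vg" "$src" "$vg" "$clone"
}

# Hypothetical names; prints the commands instead of running them.
clone_cmds vg_openvz web-master web-clone1 10G
```

The source volume should not be in use while it is copied. Otherwise the clone may capture an inconsistent state.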

Snapshot

In the case of openvz-deployment, the underlying storage layer (LVM) provides the snapshot functionality.

A snapshot results in a copy-on-write (COW) volume: reads of unchanged data are served from the origin volume, while writes go to the storage space reserved for the snapshot.

Creating a snapshot takes only about a second and involves no data transfer. A snapshot stores only the changes made after its creation rather than a full copy, so it is an efficient way to save storage space for deployments.

Recipe: Local VM deployment with Xen (localboot)

Install and set up openQRM on a physical system or on a virtual machine.

1. Enable the following plugins: dns (optional), dhcpd (optional), tftpd, local-server, novnc, device-manager, network-manager, xen.

2. Configure the Xen boot-service

Please check the Xen help section on how to use the Plugin-Manager "configure" option or the openQRM utility to apply a custom boot-service configuration.

Adapt the following configuration parameters to the bridge configuration of your virtualization host:

OPENQRM_PLUGIN_XEN_INTERNAL_BRIDGE

OPENQRM_PLUGIN_XEN_EXTERNAL_BRIDGE

If xen-bf-deployment (blockfile deployment) is used, also adapt:

OPENQRM_PLUGIN_XEN_FILE_BACKEND_DIRECTORIES

3. Select a physical server as the virtualization host (it needs VT/hardware virtualization support, available and enabled in the system BIOS).

Please notice: The system dedicated to be the virtualization host can be the openQRM server system itself or a remote physical server integrated via the Local-Server plugin.

Please check “Integrating existing, local-installed systems with Local-Server”. Please make sure this system meets the following requirements:

- Automatic package installation must be enabled so that the system can fetch package dependencies. Any required package dependencies are resolved automatically the first time an action runs.

- For Xen LVM storage: one or more LVM volume groups with free space dedicated to the Xen VM storage

- For Xen blockfile storage: free space dedicated to the Xen VM storage, optionally on remote NAS/NFS storage

- One or more bridges configured for the virtual machines (e.g. br0, br1, etc.)
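You can check whether the CPU offers the VT support required in step 3 by reading /proc/cpuinfo. Intel VT-x appears as the vmx flag and AMD-V as the svm flag. The helper below is a hypothetical sketch. A present flag shows CPU support but does not prove that the feature is enabled in the BIOS. A kernel already running as Xen dom0 may also hide these flags, so run the check before the hypervisor is installed.

```shell
#!/usr/bin/env bash
# Hypothetical check (not part of openQRM): does the CPU advertise
# hardware virtualization? Intel VT-x shows up as the "vmx" flag,
# AMD-V as "svm".

# has_hw_virt [CPUINFO_FILE]  (defaults to /proc/cpuinfo)
# Returns 0 if either flag is present as a whole word.
has_hw_virt() {
  grep -Eqw 'vmx|svm' "${1:-/proc/cpuinfo}"
}

# Usage on the candidate host:
#   has_hw_virt && echo "CPU reports VT-x/AMD-V support"
```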

1. Create a Xen Server (Server object for the virtualization host)

If the openQRM server itself is the physical system dedicated to be the virtualization host, use the Server Create wizard to create a new Server object using the openQRM server resource.

2. After creating the Server, edit it and set Virtualization to Xen Host.

If the virtualization host is a remote physical system integrated via the Local-Server plugin, the system integration has already created a Server for it. Simply edit it and set Virtualization to Xen Host.

3. Create a Xen VM storage (storage object for the virtual machine images)

Use the Storage Create wizard to create a new storage object using the same resource as selected for the Xen Server, and set the deployment type to either:

“xen-lvm-deployment”

The image volumes are created as LVM logical volumes on available LVM volume groups on the storage system, or

“xen-bf-deployment”

The image volumes are created as blockfiles within configurable directories.

Please check /usr/share/openqrm/plugins/xen/etc/openqrm-plugin-xen.conf for the configuration options
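xen-bf-deployment stores each image as a blockfile inside the configured directories, so it can help to check how much space those directories offer before creating volumes. The following minimal sketch uses the standard df command. It is not part of openQRM, and the directory in the usage comment is only an example, not an openQRM default.

```shell
#!/usr/bin/env bash
# Minimal sketch (not part of openQRM): report the free space of each
# directory intended for Xen blockfile images.

# free_kib DIR...  -> prints "<dir> <available KiB>" per directory
free_kib() {
  local dir
  for dir in "$@"; do
    # -P forces the one-line-per-filesystem POSIX format,
    # -k reports sizes in 1024-byte blocks; column 4 is "Available".
    df -Pk "$dir" | awk -v d="$dir" 'NR == 2 { print d, $4 }'
  done
}

# Example (hypothetical directory):
#   free_kib /var/lib/xen/images
```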

4. Create a Xen (localboot) virtual machine

- Use the Server Create wizard to create a new Server object

- Create a new Xen (localboot) VM resource in step 2

- Select the previously created Xen Server object for creating the new Xen (localboot) VM.

Please notice: For the initial installation of the VM image, you may want to configure the VM to boot from a prepared ISO image to start a manual or automatic operating system installation on first start-up.

Hint: Easily create an automatic installation ISO image with the LinuxCOE plugin!

- The LinuxCOE automatic installation ISO images are automatically available for selection on the virtualization host Server at /linuxcoe-iso

- Create a new Xen VM image in step 3

- Select the previously created Xen storage object for creating the new Xen VM image volume. Creating a new volume automatically creates a new image object in openQRM

Please notice: For the initial installation of the VM image, you may want to edit the image details to attach an automatic operating system installation on first start-up.

Hint: Easily create an automatic installation profile and attach it to a Xen VM image object via the openQRM Install-from-Template mechanism. The following plugins provide such profiles: Cobbler, FAI, LinuxCOE, Opsi, Clonezilla

- Start the Server

Starting the Server automatically combines the Xen (localboot) VM (resource object) and the Xen VM volume (image object).

Stopping the Server will uncouple resource and image.

Hint: After the operating system installation on the Xen VM volume, the VM is normally still set to boot from the ISO image. To reconfigure the VM to boot directly from its locally installed virtual disk, follow the steps below:

- Stop the Server

- Update the virtual machine via the plugin's VM manager

- Start the Server again

To enable further openQRM management functionality within the VM's operating system, install the openqrm-local-vm-client in the VM. Please refer to “Integrating existing, local-installed VM with Local-Server”.

Cloning/snapshotting the Xen VM image

Through the integrated Xen volume management, existing pre-installed images can be duplicated with the Clone and Snapshot mechanisms. Clones and snapshots can be used efficiently for system deployments.

Clone

Cloning a volume of a Xen VM image object results in a 1-to-1 copy of the source volume. A new image object for this volume copy is automatically created in openQRM.

The time needed to create a clone depends on the volume size, hardware and network performance, etc., because all data of the origin volume is copied to the new volume.

Snapshot

In the case of xen-lvm-deployment, the underlying storage layer (LVM) provides the snapshot functionality. A snapshot results in a copy-on-write (COW) volume: reads of unchanged data are served from the origin volume, while writes go to the storage space reserved for the snapshot. Creating a snapshot takes only about a second.

It involves no data transfer. A snapshot stores only the changes made after its creation rather than a full copy, so it is an efficient way to save storage space for deployments.

Howto

Virtualization with Xen and openQRM 5.2 on Debian Wheezy:

http://www.openqrm-enterprise.com/resources/documentation-howtos/howtos/virtualization-with-xen-and-openqrm-51-on-debian-wheezy.html

Recipe: Local VM deployment with VMware ESXi (localboot)

1. Install and set up openQRM on a physical system or on a virtual machine

2. Install the latest VMware vSphere SDK on the openQRM server system

3. Enable the following plugins: dns (optional), dhcpd (optional), tftpd, local-server, novnc, vmware-esx

4. Configure the VMware ESXi Boot-service

Please check the VMware ESXi help section on how to use the Plugin-Manager "configure" option or the openQRM utility to apply a custom boot-service configuration.

Adapt the following configuration parameters to the bridge configuration of your virtualization host:

OPENQRM_VMWARE_ESX_INTERNAL_BRIDGE

OPENQRM_VMWARE_ESX_EXTERNAL_BRIDGE_2

OPENQRM_VMWARE_ESX_EXTERNAL_BRIDGE_3

OPENQRM_VMWARE_ESX_EXTERNAL_BRIDGE_4

OPENQRM_VMWARE_ESX_EXTERNAL_BRIDGE_5

If you are using the openQRM Cloud with VMware ESXi, please also set:

OPENQRM_VMWARE_ESX_CLOUD_DATASTORE

OPENQRM_VMWARE_ESX_GUEST_ID

OPENQRM_VMWARE_ESX_CLOUD_DEFAULT_VM_TYPE

5. Install one (or more) VMware ESXi servers within the openQRM management network

6. Use the VMware ESXi discovery to discover VMware ESXi system(s)

After the discovery please integrate the VMware-ESXi system(s) by providing the administrative credentials.

Please notice: Integrating the VMware ESXi system automatically creates and pre-configures a VMware ESXi Host Server and Storage in openQRM.

7. Create a VMware ESXi (localboot) virtual machine

- Use the Server Create wizard to create a new Server object

- Create a new VMware ESXi (localboot) VM resource in step 2

- Select the previously created VMware ESXi Server object for creating the new VMware ESXi (localboot) VM.

Please notice: For the initial installation of the VM image, you may want to configure the VM to boot from a prepared ISO image to start a manual or automatic operating system installation on first start-up.

Hint: Easily create an automatic installation ISO image with the LinuxCOE plugin! The LinuxCOE automatic installation ISO images are automatically available via NFS at openQRM-server:/linuxcoe-iso. It is recommended to add this NFS export as an ISO datastore to the ESXi host.

- The VMware ESXi VM image is created automatically when the VMware ESXi VM is created in step 2. The Server wizard therefore skips step 3 (selecting/creating a new image).

Please notice: For the initial installation of the VM image, you may want to edit the image details to attach an automatic operating system installation on first start-up.

Hint: Easily create automatic installation profiles and attach them to a VMware ESXi VM image object via the openQRM Install-from-Template mechanism. The following plugins provide such profiles: Cobbler, FAI, LinuxCOE, Opsi, Clonezilla

- Start the Server

Starting the Server automatically combines the VMware ESXi (localboot) VM (resource object) and the VMware ESXi VM volume (image object).

Stopping the Server will uncouple resource and image.

Hint: After the operating system installation on the VMware ESXi VM volume, the VM is normally still set to boot from the ISO image. To reconfigure the VM to boot directly from its locally installed virtual disk, follow the steps below:

- Stop the Server

- Update the virtual machine via the VM manager

- Start the Server again

To enable further openQRM management functionality within the VM's operating system, install the openqrm-local-vm-client in the VM. Please refer to “Integrating existing, local-installed VM with Local-Server”.

Recipe: Integrating existing, local-installed systems with Local-Server

1. Copy (scp) the "openqrm-local-server" utility to an existing, locally installed server in your network:

scp /usr/share/openqrm/plugins/local-server/bin/openqrm-local-server [ip-address-of-existing-server]:/tmp/

Log in to the remote system via ssh and execute the "openqrm-local-server" utility on the remote system:

ssh [ip-address-of-existing-server]

/tmp/openqrm-local-server integrate -u openqrm -p openqrm -q [ip-address-of-openQRM-server] -i eth0 [-s http/https]

The system now appears in openQRM as a new resource. It should now be set to "network-boot" in its BIOS to allow dynamic assignment and deployment.

The resource can now be used, e.g., to create a new "storage-server" within openQRM.

After setting the system to "network-boot" in its BIOS, it can also be used to deploy server images of different types.

To remove a system integrated via the local-server plugin from openQRM, run the "openqrm-local-server" utility again on the remote system:

/tmp/openqrm-local-server remove -u openqrm -p openqrm -q [ip-address-of-openQRM-server] [-s http/https]
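The copy-and-integrate steps above can be combined into one helper when several systems need to be integrated. This hypothetical wrapper is not part of openQRM. It reuses exactly the commands shown above and defaults to a dry run that only prints them. It uses the example credentials openqrm/openqrm, which should be replaced with your own, and it omits the optional [-s http/https] argument.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not shipped with openQRM) that bundles the two
# documented steps: copy openqrm-local-server to a remote system and run
# "integrate" there via ssh. By default (DRY_RUN=1) it only prints the
# commands; set DRY_RUN=0 to execute them.

UTIL=/usr/share/openqrm/plugins/local-server/bin/openqrm-local-server

# run CMD... : print or execute a command depending on DRY_RUN
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# integrate_host TARGET_IP OPENQRM_SERVER_IP [INTERFACE]
integrate_host() {
  local target="$1" server="$2" iface="${3:-eth0}"
  run scp "$UTIL" "$target:/tmp/"
  # Credentials are the example values from the text; use your own.
  run ssh "$target" /tmp/openqrm-local-server integrate \
    -u openqrm -p openqrm -q "$server" -i "$iface"
}
```

For example, integrate_host 192.0.2.10 192.0.2.1 prints the scp and ssh commands for the host 192.0.2.10. Rerunning it with DRY_RUN=0 executes them.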

Recipe: Integrating existing, local-installed VM with Local-Server

For locally installed virtual machines (e.g. KVM (localboot), Xen (localboot), LXC (localboot), openVZ (localboot)) that have access to the openQRM network, an "openqrm-local-vm-client" is available.

This "openqrm-local-vm-client" only starts and stops the plugin boot-services to allow further management functionality. Monitoring and openQRM actions still run on behalf of the VM host.

1. Download/copy the "openqrm-local-vm-client" from the Local-Server help section (Local VMs) to a locally installed VM:

scp openqrm-local-vm-client [ip-address-of-the-VM]:/tmp/

2. Execute the "openqrm-local-vm-client" on the VM:

/tmp/openqrm-local-vm-client

The "openqrm-local-vm-client" configures itself fully automatically.

Recipe: Local deployment with Cobbler (automatic Linux installations)

1. Install a Cobbler install server on a dedicated system (physical server or VM).

Notice: Configure the Cobbler install service not to run the dhcpd service on the Cobbler system itself.

The openQRM dhcpd service provided by the Dhcpd plugin is used instead.
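In Cobbler, DHCP handling is usually controlled by the manage_dhcp setting. The sketch below switches it off in the settings file. It assumes the Cobbler 2.x style settings file /etc/cobbler/settings, where 0 means off; newer Cobbler releases use settings.yaml with true/false values instead. It also assumes GNU sed for in-place editing, and that the change is applied afterwards with cobbler sync. Stopping a dhcpd service that is already running on the Cobbler host is a separate step not shown here.

```shell
#!/usr/bin/env bash
# Sketch (not part of openQRM): stop Cobbler from managing DHCP so the
# openQRM dhcpd plugin remains the only DHCP server on the network.
# Assumes GNU sed and a settings file containing a "manage_dhcp:" line.

# disable_cobbler_dhcp SETTINGS_FILE
disable_cobbler_dhcp() {
  local settings="$1"
  # Rewrite the whole manage_dhcp line, whatever its current value is.
  sed -i 's/^manage_dhcp:.*/manage_dhcp: 0/' "$settings"
}

# On the Cobbler server, for example:
#   disable_cobbler_dhcp /etc/cobbler/settings && cobbler sync
```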

2. Additionally, install the screen package on the Cobbler server.

3. Integrate the Cobbler install server into openQRM via the "local-server" plugin. Please check “Integrating existing, local-installed systems with Local-Server”.

4. Create a new storage server of the type "cobbler-deployment" using the Cobbler system's resource.

5. Add Cobbler snippets (kickstart templates) to the Cobbler install server via the Cobbler Web UI and combine them into installation profiles.

Hint: Add the Cobbler snippet openqrm_client_auto_install.snippets from the Cobbler plugin's help section to your Cobbler profiles to automatically install the openQRM client on the provisioned systems.

VM images for local-deployment (e.g. Citrix (localboot), KVM (localboot), Xen (localboot)) can now be set to "install-from-template" via Cobbler in the image Edit section.

Local-deployment images for physical systems are created through the Cobbler plugin's Image Admin section. In the image Edit section they can be set to "install-from-template" via Cobbler in the same way as virtual machines.

Starting a Server with a local-deployment image configured via an Install-from-Template Cobbler installation profile automatically transfers the system to the Cobbler server for the initial OS installation (PXE/Network-boot).

While the Cobbler server runs the automatic OS installation, openQRM takes back control of the system and prepares it for local booting.

After rebooting from the automatic installation, the system is fully integrated into openQRM.

Hint: The Install-from-Template mechanism with Cobbler can also be used in the openQRM Cloud!

Just make sure the image masters provided in the openQRM Cloud are configured with Install-from-Template.

1. Enable the following plugins: dns (optional), dhcpd, tftpd, device-manager, clonezilla

2. Create a new storage server of the type "clonezilla-deployment"

Please notice: The system dedicated to be the Clonezilla template storage can be the openQRM server system itself or a remote physical server integrated via the Local-Server plugin.

Please check “Integrating existing, local-installed systems with Local-Server”.

Please make sure this system meets the following requirements:

- Automatic package installation must be enabled so that the system can fetch package dependencies. Any required package dependencies are resolved automatically the first time an action runs.

- One or more LVM volume groups with free space dedicated to the Clonezilla templates

3. Grab a local-installed system (populating the template)

- Network-boot (PXE) a physical system or a virtual machine into the idle state in openQRM (network-boot and automatic integration)

- In the “Template Admin”, use the “Deploy” button to activate the “grab” phase

- In the second step, select the “idle” resource (the system with the locally installed OS on its hard disk)

- The system now reboots into the “grab” phase, starts Clonezilla, mounts the template storage and transfers its disk content to the Clonezilla template.

Hint: The “Drain” action empties the selected template so it can be reused for the “grab” phase.

4. Deployment of Clonezilla templates

- Use the “Image Admin” to create a new Clonezilla volume

- Creating a new volume automatically creates a new image object in openQRM

Clonezilla images can now be set to "install-from-template" via Clonezilla in the image “Edit” section.

Edit the created Clonezilla image and select “Automatic Clone from Template” for the automatic installation.

Then select the Clonezilla storage server and the template previously created and populated.

Starting a Server with a Clonezilla image configured via an “Install-from-Template” Clonezilla template automatically “transfers” the system to Clonezilla for the initial OS installation (PXE/Network-boot).

While Clonezilla runs the automatic OS installation, openQRM takes back control of the system and prepares it for local booting. After rebooting from the automatic installation, the system is fully integrated into openQRM.

Hint: The “Install-from-Template” mechanism with Clonezilla can also be used in the openQRM Cloud! Just make sure the image masters provided in the openQRM Cloud are configured with “Install-from-Template”.

Recipe: Local deployment with FAI (automatic Linux installations)

Install and set up openQRM on a physical system or on a virtual machine

1. Enable the following plugins: dns (optional), dhcpd, tftpd, local-server, fai

2. Install a FAI install server on a dedicated system (physical server or VM).

Notice: Configure the FAI install service not to run the “dhcpd” service on the FAI system itself. The openQRM “dhcpd” service provided by the Dhcpd plugin is used instead.

Additionally, install the “screen” package on the FAI server.

Integrate the FAI install server into openQRM via the "local-server" plugin.

Please check “Integrating existing, local-installed systems with Local-Server”.

3. Create a new storage server of the type "fai-deployment" using the FAI system's resource.

Add FAI “snippets” (preseed templates) to the FAI install server and combine them into installation profiles.

Hint: Add the FAI snippet openqrm_client_fai_auto_install.snippets from the FAI plugin help section to your FAI profiles to automatically install the openQRM client on the provisioned systems.

VM images for local-deployment (e.g. Citrix (localboot), KVM (localboot), Xen (localboot)) can now be set to "install-from-template" via FAI in the image “Edit” section.

Local-deployment images for physical systems are created through the FAI plugin's “Image Admin” section. In the image “Edit” section they can be set to "install-from-template" via FAI in the same way as virtual machines.

Starting a Server with a local-deployment image configured via an “Install-from-Template” FAI installation profile automatically “transfers” the system to the FAI server for the initial OS installation (PXE/Network-boot). While the FAI server runs the automatic OS installation, openQRM takes back control of the system and prepares it for local booting.

After rebooting from the automatic installation, the system is fully integrated into openQRM.

Hint: The “Install-from-Template” mechanism with FAI can also be used in the openQRM Cloud! Just make sure the image masters provided in the openQRM Cloud are configured with “Install-from-Template”.

1. Enable the following plugins: dns (optional), dhcpd, tftpd, linuxcoe

Notice: In contrast to Cobbler, FAI and Opsi, the LinuxCOE automatic install server is provided by the LinuxCOE plugin itself. After you enable and start the LinuxCOE plugin, the LinuxCOE automatic install server is configured automatically and its Web UI is embedded into the openQRM LinuxCOE plugin.

There is no need for a dedicated system for the install server.

2. Create a new storage server of the type "LinuxCOE-deployment" using the openQRM server system's resource.

3. Create LinuxCOE automatic installation profiles via the LinuxCOE Web UI (LinuxCOE plugin - create templates).

Provide a description for each created template via the LinuxCOE template manager.

VM images for local-deployment (e.g. Citrix (localboot), KVM (localboot), Xen (localboot)) can now be set to "install-from-template" via LinuxCOE in the image “Edit” section.

Local-deployment images for physical systems are created through the LinuxCOE plugin's “Image Manager” section. In the image “Edit” section they can be set to "install-from-template" via LinuxCOE in the same way as virtual machines.

Starting a Server with a local-deployment image configured via an “Install-from-Template” LinuxCOE installation profile automatically “transfers” the system to the LinuxCOE server for the initial OS installation (PXE/Network-boot).

While the LinuxCOE server runs the automatic OS installation, openQRM takes back control of the system and prepares it for local booting. After rebooting from the automatic installation, the system is fully integrated into openQRM.

Hint: The “Install-from-Template” mechanism with LinuxCOE can also be used in the openQRM Cloud! Just make sure the image masters provided in the openQRM Cloud are configured with “Install-from-Template”.

Hint: After creating an installation template, the resulting ISO image can be burned to a CD to automatically install a physical server (the original goal of the LinuxCOE project).

Hint: In openQRM, the LinuxCOE ISO images are also automatically available in the /linuxcoe-iso directory on virtualization hosts for local-deployment VMs (e.g. "KVM (localboot)" and "Xen (localboot)"). Simply configure a virtual machine to boot from such a LinuxCOE ISO image for a fully automatic VM installation.

Please notice that after a successful installation the VM will most likely try to boot from the ISO image again once the automatic install procedure has finished! Stop the VM's Server after the initial automatic installation, then reconfigure the virtual machine to boot from "local" and start the Server again.

1. Enable the following plugins: dns (optional), dhcpd, tftpd, local-server, opsi

2. Install an Opsi install server on a dedicated system (physical server or VM).

Notice: Configure the Opsi install service not to run the “dhcpd” service on the Opsi system itself. The openQRM “dhcpd” service provided by the Dhcpd plugin is used instead.

Additionally, install the “screen” package on the Opsi server.

Integrate the Opsi install server into openQRM via the "local-server" plugin.

Please check “Integrating existing, local-installed systems with Local-Server”.

3. Create a new storage server of the type "opsi-deployment" using the Opsi system's resource.

Create and configure automatic Windows installations and optional Windows application packages via the Opsi Web UI.

VM images for local-deployment (e.g. Citrix (localboot), KVM (localboot), Xen (localboot)) can now be set to "install-from-template" via Opsi in the image “Edit” section.

Local-deployment images for physical systems are created through the Opsi plugin's “Image Manager” section.

In the image “Edit” section they can be set to "install-from-template" via Opsi in the same way as virtual machines.

Starting a Server with a local-deployment image configured via an “Install-from-Template” Opsi installation profile automatically “transfers” the system to the Opsi server for the initial OS installation (PXE/Network-boot).

While the Opsi server runs the automatic OS installation, openQRM takes back control of the system and prepares it for local booting.

After rebooting from the automatic installation, the system is fully integrated into openQRM via the openQRM client for Windows.

Hint: The “Install-from-Template” mechanism with Opsi can also be used in the openQRM Cloud! Just make sure the image masters provided in the openQRM Cloud are configured with “Install-from-Template”.

Network Deployment - DHCPD/TFTPD/Network-Storage

Network-deployment in openQRM combines either a network-booted physical server or a virtualization plugin (for network-deployment) with a storage plugin (also for network-deployment).

In the case of network-deployment of a physical server, the physical system itself is available as an “idle” resource after network-booting it via PXE.

In the case of virtual machine network-deployment, the virtualization plugin (e.g. Citrix, KVM, VMware-ESX, Xen) provides ONLY the “Resource” object of a Server!

The storage plugin (e.g. AOE-Storage, iSCSI-Storage, NFS-Storage, LVM-Storage, TMPFS-Storage etc.) provides ONLY the “Image” (and its Storage) object of a Server!

A network-booted physical system can be deployed with ANY storage plugin (for network-deployment).

A network-booted virtual machine provided by a virtualization plugin (for network-deployment) can be deployed with ANY storage plugin (for network-deployment).

Recipe: Network VM deployment with Citrix (network boot)

Install and set up openQRM on a physical system or on a virtual machine

1. Install the Citrix “xe” command line utility on the openQRM server system to /usr/bin/xe (e.g. use “scp” to copy it from your Citrix XenServer system).

Enable the following plugins: dns (optional), dhcpd, tftpd, local-server, novnc, citrix

2. Configure the Citrix Boot-service

Please check the Citrix help section on how to use the Plugin-Manager "configure" option or the “openqrm” utility to apply a custom boot-service configuration.

Adapt the following configuration parameters to the bridge configuration of your virtualization host:

CITRIX_MANAGEMENT_INTERFACE

CITRIX_EXTERNAL_INTERFACE

CITRIX_DEFAULT_VM_TEMPLATE

If you are using the openQRM Cloud with Xen (network-deployment), please also set:

CITRIX_DEFAULT_SR_UUID
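The UUID for CITRIX_DEFAULT_SR_UUID can be looked up on the XenServer with xe sr-list, whose --minimal option prints the matching values as a comma-separated list. The hypothetical helper below takes that output, checks that the first entry looks like a UUID, and prints it as a setting line. The name-label "Local storage" in the usage comment is only an example SR name.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of openQRM): turn the output of
#   xe sr-list name-label="Local storage" params=uuid --minimal
# into a CITRIX_DEFAULT_SR_UUID line. --minimal prints a comma-separated
# list, so only the first UUID is used.

pick_sr_uuid() {
  local first="${1%%,*}"   # everything before the first comma
  local re='^[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}$'
  if [[ $first =~ $re ]]; then
    printf 'CITRIX_DEFAULT_SR_UUID="%s"\n' "$first"
  else
    echo "no valid SR UUID found" >&2
    return 1
  fi
}

# On the XenServer host, for example:
#   pick_sr_uuid "$(xe sr-list name-label='Local storage' params=uuid --minimal)"
```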

3. Install one (or more) Citrix XenServer systems within the openQRM management network.

Hint: Enable SSH login on the Citrix XenServer.

The openQRM NoVNC plugin provides access to the Citrix VM console (this requires SSH login to be enabled in the Citrix XenServer configuration).

Use the Citrix discovery to discover Citrix XenServer system(s). After the discovery, integrate the Citrix system(s) by providing the administrative credentials.

Please notice: Integrating the Citrix XenServer system automatically creates and pre-configures a Citrix host Server in openQRM!

4. Create a “Citrix” virtual machine

- Use the “Server Create” wizard to create a new Server object

- Create a new Citrix VM resource in step 2

- Select the previously created “Citrix” host Server object for creating the new Citrix VM.

The newly created Citrix VM automatically performs a network boot and soon becomes available as an “idle” Citrix VM resource. Then select the newly created resource for the Server.

- Creating an image for network-deployment

In step 3 of the Server wizard, create a new image using one of the storage plugins for network-deployment, e.g. AOE-Storage, iSCSI-Storage, NFS-Storage, LVM-Storage, TMPFS-Storage, ZFS-Storage.

Hint: The creation of the image for network-deployment depends on the specific storage plugin being used. Please refer to the section about the storage plugin you have chosen for network-deployment in this document.

After creating the new network-deployment image, select it in the Server wizard.

- Save the Server

Starting the Server automatically combines the Citrix VM (resource object) and the image object (volume) abstracted via the specific storage plugin. Stopping the Server will “uncouple” resource and image.

To enable further openQRM management functionality “within” the virtual machine's operating system, the “openqrm-client” is automatically installed in the VM during the deployment phase.

There is no need to integrate it further, e.g. via the “Local-Server” plugin!

1. Install and setup openQRM on a physical system or on a virtual machine

2. Enable the following plugins: dns (optional), dhcpd, tftpd, local-server, novnc, device-manager, network-manager, kvm

3. Configure the KVM boot-service

Please check the KVM help section on how to use the Plugin-Manager "configure" option or the “openqrm” utility to apply a custom boot-service configuration.

Adapt the following configuration parameters to the bridge configuration of your virtualization host. This network configuration is only used by the openQRM Cloud to auto-create VMs.

OPENQRM_PLUGIN_KVM_BRIDGE_NET1

OPENQRM_PLUGIN_KVM_BRIDGE_NET2

OPENQRM_PLUGIN_KVM_BRIDGE_NET3

OPENQRM_PLUGIN_KVM_BRIDGE_NET4

OPENQRM_PLUGIN_KVM_BRIDGE_NET5

4. Select a physical server as the virtualization host (it needs VT/hardware virtualization support, available and enabled in the system BIOS).

Please notice: The system dedicated to be the virtualization host can be the openQRM server system itself or a “remote” physical server integrated via the “Local-Server” plugin.

Please check “Integrating existing, local-installed systems with Local-Server”. Please make sure this system meets the following requirements:

- Automatic package installation must be enabled so that the system can fetch package dependencies. Any required package dependencies are resolved automatically the first time an action runs.

- One or more bridges configured for the virtual machines (e.g. br0, br1, etc.)

1. Create a “KVM” Server (Server object for the virtualization host)

If the openQRM server itself is the physical system dedicated to be the virtualization host, use the “Server Create” wizard to create a new Server object using the openQRM server resource.

2. After creating the Server, edit it and set “Virtualization” to “KVM Host”

If the virtualization host is a “remote” physical system integrated via the “Local-Server” plugin, the system integration has already created a Server for it. Simply edit it and set “Virtualization” to “KVM Host”.

3. Create a “KVM” virtual machine

- Use the “Server Create” wizard to create a new Server object

- Create a new KVM VM resource in the 2. step - Select the previously created “KVM” Server object for creating the new KVM VM.

The new created KVM VM automatically performs a network-boot and is available as “idle” KVM VM resource soon. Then select the new created resource for the Server.

- Create an image for network-deployment

In step 3 of the Server wizard, create a new image using one of the storage plugins for network-deployment, e.g. AOE-Storage, iSCSI-Storage, NFS-Storage, LVM-Storage, TMPFS-Storage or ZFS-Storage.

Hint: The creation of the image for network-deployment depends on the specific storage plugin being used. Please refer to the section about the storage plugin you have chosen for network-deployment in this document.

- After creating the new network-deployment image, select it in the Server wizard.

- Start the Server

Starting the Server automatically combines the KVM VM (resource object) and the image object (volume) abstracted via the specific storage plugin. Stopping the Server will “uncouple” resource and image.
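The coupling described above can be pictured with a small conceptual model. This is a sketch only: the `Server` class and its `start`/`stop` methods are hypothetical and are not openQRM's internal API. They merely show that a running Server pairs one resource (the VM) with one image (the volume) and that stopping it releases the pairing without deleting either object.

```python
# Conceptual model only: Server, start() and stop() are HYPOTHETICAL names and
# do not correspond to openQRM's internal API.
from dataclasses import dataclass
from typing import Optional


@dataclass
class Server:
    name: str
    resource: str  # e.g. an idle KVM VM resource
    image: str     # e.g. a volume created by a storage plugin
    active_pair: Optional[tuple[str, str]] = None

    def start(self) -> None:
        # Starting couples the resource (VM) with the image (volume).
        self.active_pair = (self.resource, self.image)

    def stop(self) -> None:
        # Stopping uncouples them; both objects still exist afterwards.
        self.active_pair = None


if __name__ == "__main__":
    srv = Server("web01", resource="kvm-vm-1", image="lvm-volume-1")
    srv.start()
    print(srv.active_pair)
    srv.stop()
    print(srv.active_pair, srv.resource, srv.image)
```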

To enable further openQRM management functionality “within” the virtual machine's operating system, the “openqrm-client” is installed automatically in the VM during the deployment phase. There is no need to integrate the VM separately, e.g. via the “Local-Server” plugin.

1. Install and set up openQRM on a physical system or on a virtual machine

2. Enable the following plugins: dns (optional), dhcpd, tftpd, local-server, novnc, libvirt

3. Configure the Libvirt Boot-service

Please check the Libvirt help section on how to use the Plugin-Manager "configure" option or the “openqrm” utility to apply a custom boot-service configuration.

Adapt the following configuration parameters according to your virtualization host bridge configuration. This network configuration is used only by the openQRM Cloud to create VMs automatically.

OPENQRM_PLUGIN_LIBVIRT_BRIDGE_NET1

OPENQRM_PLUGIN_LIBVIRT_BRIDGE_NET2

OPENQRM_PLUGIN_LIBVIRT_BRIDGE_NET3

OPENQRM_PLUGIN_LIBVIRT_BRIDGE_NET4

OPENQRM_PLUGIN_LIBVIRT_BRIDGE_NET5

OPENQRM_PLUGIN_LIBVIRT_PRIMARY_NIC_TYPE

OPENQRM_PLUGIN_LIBVIRT_ADDITIONAL_NIC_TYPE

OPENQRM_PLUGIN_LIBVIRT_VM_DEFAULT_VNCPASSWORD

4. Select a physical server as the virtualization host (requires VT/virtualization support to be available and enabled in the system BIOS).

Please note: the system dedicated as the virtualization host can be either the openQRM server itself or a “remote” physical server integrated via the “Local-Server” plugin.

Please check “Integrating existing, local-installed systems with Local-Server”.

Please make sure this system meets the following requirements:

- Automatic package installation is enabled so that the system can fetch package dependencies. Any required package dependencies are resolved automatically when the first action runs.

- One or more bridges are configured for the virtual machines (e.g. br0, br1, etc.).

5. Create a “Libvirt” Server (Server object for the virtualization host)

If the openQRM server itself is the physical system dedicated as the virtualization host, use the “Server Create” wizard to create a new Server object using the openQRM server resource.

6. After creating the Server, edit it and set “Virtualization” to “Libvirt Host”.

If a “remote” physical system integrated via the “Local-Server” plugin is the virtualization host, the system integration has already created a Server for this system. Simply edit it and set “Virtualization” to “Libvirt Host”.

7. Create a “Libvirt” (network boot) virtual machine

- Use the “Server Create” wizard to create a new Server object.

- In step 2 of the wizard, create a new Libvirt VM resource: select the previously created “Libvirt” Server object for creating the new Libvirt VM.

The newly created Libvirt VM automatically performs a network boot and soon becomes available as an “idle” Libvirt VM resource.

Then select the newly created resource for the Server.
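If you automate around this step, waiting for the new VM to appear as “idle” is a plain polling loop. The sketch below assumes a hypothetical `get_state` callable that returns the resource state as a string; how that state is obtained is not covered here, and no openQRM API is implied.

```python
# Sketch of polling until a new VM resource reports "idle".
# get_state is a HYPOTHETICAL callable supplied by your own tooling.
import time
from typing import Callable


def wait_for_state(get_state: Callable[[], str], wanted: str = "idle",
                   timeout: float = 300.0, interval: float = 5.0) -> bool:
    """Return True once get_state() == wanted, or False when timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        if get_state() == wanted:
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


if __name__ == "__main__":
    # Simulated states for demonstration: the VM boots, then becomes idle.
    states = iter(["booting", "booting", "idle"])
    print(wait_for_state(lambda: next(states), timeout=10.0, interval=0.1))
```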

- Creating an image for network-deployment

In step 3 of the Server wizard, create a new image using one of the storage plugins for network-deployment, e.g. AOE-Storage, iSCSI-Storage, NFS-Storage, LVM-Storage, TMPFS-Storage or ZFS-Storage.

Hint: The creation of the image for network-deployment depends on the specific storage plugin being used. Please refer to the section about the storage plugin you have chosen for network-deployment in this document.

- After creating the new network-deployment image, select it in the Server wizard.

- Start the Server

Starting the Server automatically combines the Libvirt VM (resource object) and the image object (volume) abstracted via the specific storage plugin.

Stopping the Server will “uncouple” resource and image.

To enable further openQRM management functionality “within” the virtual machine's operating system, the “openqrm-client” is installed automatically in the VM during the deployment phase. There is no need to integrate the VM separately, e.g. via the “Local-Server” plugin.

1. Install and set up openQRM on a physical system or on a virtual machine

2. Enable the following plugins: dns (optional), dhcpd, tftpd, local-server, novnc, device-manager, network-manager, xen

3. Configure the Xen Boot-service

Please check the Xen help section on how to use the Plugin-Manager "configure" option or the “openqrm” utility to apply a custom boot-service configuration.

Adapt the following configuration parameters according to your virtualization host bridge configuration:

OPENQRM_PLUGIN_XEN_INTERNAL_BRIDGE

OPENQRM_PLUGIN_XEN_EXTERNAL_BRIDGE
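Before entering bridge names for the internal and external bridge parameters, it is worth confirming which physical interfaces each bridge actually carries. The sketch below reads the standard Linux sysfs layout (/sys/class/net/<bridge>/bridge marks a bridge device, /sys/class/net/<bridge>/brif lists its ports); the bridge names br0 and br1 are hypothetical, and the script is not part of the Xen plugin.

```python
# Sketch: list the ports of the bridges intended for
# OPENQRM_PLUGIN_XEN_INTERNAL_BRIDGE and OPENQRM_PLUGIN_XEN_EXTERNAL_BRIDGE.
# Uses the standard Linux sysfs layout; bridge names below are HYPOTHETICAL.
import os
from typing import Optional


def bridge_ports(bridge: str, sysfs_net: str = "/sys/class/net") -> Optional[list[str]]:
    """Return the sorted ports of a bridge, or None if it is not a bridge."""
    if not os.path.isdir(os.path.join(sysfs_net, bridge, "bridge")):
        return None
    brif = os.path.join(sysfs_net, bridge, "brif")
    return sorted(os.listdir(brif)) if os.path.isdir(brif) else []


if __name__ == "__main__":
    for role, name in (("internal", "br0"), ("external", "br1")):
        ports = bridge_ports(name)
        if ports is None:
            print(f"{role} bridge {name}: not found or not a bridge")
        else:
            print(f"{role} bridge {name}: ports {ports or 'none'}")
```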

4. Select a physical server as the virtualization host (requires VT/virtualization support to be available and enabled in the system BIOS).

Please note: the system dedicated as the virtualization host can be either the openQRM server itself or a “remote” physical server integrated via the “Local-Server” plugin.

Please check “Integrating existing, local-installed systems with Local-Server”.

Please make sure this system meets the following requirements:

- Automatic package installation is enabled so that the system can fetch package dependencies. Any required package dependencies are resolved automatically when the first action runs.

- One or more bridges configured for the virtual machines (e.g. br0, br1, etc.)

5. Create a “Xen” Server (Server object for the virtualization host).

If the openQRM server itself is the physical system dedicated as the virtualization host, use the “Server Create” wizard to create a new Server object using the openQRM server resource.

6. After creating the Server, edit it and set “Virtualization” to “Xen Host”.

If a “remote” physical system integrated via the “Local-Server” plugin is the virtualization host, the system integration has already created a Server for this system.

Simply edit it and set “Virtualization” to “Xen Host”.

7. Create a “Xen” virtual machine

- Use the “Server Create” wizard to create a new Server object.

- Create a new Xen VM resource in step 2 of the wizard.

- Select the previously created Xen Server object for creating the new Xen VM.

- The newly created Xen VM automatically performs a network boot and soon becomes available as an “idle” Xen VM resource. Then select the newly created resource for the Server.

- Create an image for network-deployment

In step 3 of the Server wizard, create a new image using one of the storage plugins for network-deployment, e.g. AOE-Storage, iSCSI-Storage, NFS-Storage, LVM-Storage, TMPFS-Storage or ZFS-Storage.

Hint: The creation of the image for network-deployment depends on the specific storage plugin being used. Please refer to the section about the storage plugin you have chosen for network-deployment in this document.

- After creating the new network-deployment image, select it in the Server wizard.

- Start the Server

Starting the Server automatically combines the Xen VM (resource object) and the image object (volume) abstracted via the specific storage plugin.

Stopping the Server will “uncouple” resource and image.

To enable further openQRM management functionality “within” the virtual machine's operating system, the “openqrm-client” is installed automatically in the VM during the deployment phase.

There is no need to integrate the VM separately, e.g. via the “Local-Server” plugin.

1. Install and set up openQRM on a physical system or on a virtual machine.

2. Install the latest VMware vSphere SDK on the openQRM server system.

3. Enable the following plugins: dns (optional), dhcpd, tftpd, local-server, novnc, vmware-esx

4. Configure the VMware ESXi Boot-service.

Please check the VMware ESXi help section on how to use the Plugin-Manager "configure" option or the “openqrm” utility to apply a custom boot-service configuration.

Adapt the following configuration parameters according to your virtualization host bridge configuration:

OPENQRM_VMWARE_ESX_INTERNAL_BRIDGE

OPENQRM_VMWARE_ESX_EXTERNAL_BRIDGE_2

OPENQRM_VMWARE_ESX_EXTERNAL_BRIDGE_3

OPENQRM_VMWARE_ESX_EXTERNAL_BRIDGE_4

OPENQRM_VMWARE_ESX_EXTERNAL_BRIDGE_5

If you are using openQRM Cloud with VMware ESXi (network-deployment), please also set:

OPENQRM_VMWARE_ESX_CLOUD_DATASTORE

OPENQRM_VMWARE_ESX_GUEST_ID

OPENQRM_VMWARE_ESX_CLOUD_DEFAULT_VM_TYPE

5. Install one (or more) VMware ESXi servers within the openQRM management network.

6. Use the VMware ESXi Discovery to discover VMware-ESXi system(s).

After the discovery please integrate the VMware-ESXi system(s) by providing the administrative credentials.
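The VMware ESXi Discovery performs this search for you. Purely to illustrate the idea, the sketch below probes a subnet for hosts answering on TCP port 443, where ESXi serves its HTTPS management interface. This is not how the openQRM plugin is implemented; the subnet and timeout are hypothetical, and an open port 443 only marks a candidate, not a confirmed ESXi system. Scanning is sequential, so a /24 network with a 0.5 s timeout can take around two minutes.

```python
# Illustration only: find candidate hosts with TCP port 443 open in a subnet.
# NOT the openQRM discovery mechanism; subnet and timeout are HYPOTHETICAL.
import ipaddress
import socket


def candidate_hosts(cidr: str) -> list[str]:
    """All usable host addresses in the given network."""
    return [str(ip) for ip in ipaddress.ip_network(cidr, strict=False).hosts()]


def port_open(host: str, port: int = 443, timeout: float = 0.5) -> bool:
    """True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable or timed out
        return False


def discover(cidr: str, port: int = 443, timeout: float = 0.5) -> list[str]:
    """Sequentially probe every host in the subnet and return the responders."""
    return [h for h in candidate_hosts(cidr) if port_open(h, port, timeout)]


if __name__ == "__main__":
    print(discover("192.168.10.0/28"))  # hypothetical management subnet
```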

Please note: integrating the VMware ESXi system automatically creates and pre-configures a VMware ESXi host Server in openQRM.

7. Create a “VMware ESXi” virtual machine

- Use the “Server Create” wizard to create a new Server object.

- In step 2 of the wizard, create a new VMware ESX VM resource: select the previously created “VMware ESX” Server object for creating the new VMware ESXi VM.

The newly created VMware ESXi VM automatically performs a network boot and soon becomes available as an “idle” VMware ESXi VM resource. Then select the newly created resource for the Server.

- Create an image for network-deployment

In step 3 of the Server wizard, create a new image using one of the storage plugins for network-deployment, e.g. AOE-Storage, iSCSI-Storage, NFS-Storage, LVM-Storage, TMPFS-Storage or ZFS-Storage.

Hint: The creation of the image for network-deployment depends on the specific storage plugin being used. Please refer to the section about the storage plugin you have chosen for network-deployment in this document.

- After creating the new network-deployment image, select it in the Server wizard.

- Save the Server

- Start the Server

Starting the Server automatically combines the VMware ESXi VM (resource object) and the image object (volume) abstracted via the specific storage plugin.

Stopping the Server will “uncouple” resource and image.

To enable further openQRM management functionality “within” the virtual machine's operating system, the “openqrm-client” is installed automatically in the VM during the deployment phase.

There is no need to integrate the VM separately, e.g. via the “Local-Server” plugin.

1. Install and set up openQRM on a physical system or on a virtual machine

2. Enable the following plugins: dns (optional), dhcpd, tftpd, aoe-storage

3. Select a physical server or virtual machine for the AOE storage

Please note: the system dedicated as the AOE storage can be either the openQRM server itself or a “remote” physical server integrated via the “Local-Server” plugin.

Please check “Integrating existing, local-installed systems with Local-Server”.

Please make sure this system meets the following requirement:

- Automatic package installation is enabled so that the system can fetch package dependencies. Any required package dependencies are resolved automatically when the first action runs.

4. Create an AOE storage object in openQRM

- go to Base - Components - Storage - Create

- provide a name for the storage object

- select “aoe-deployment” as deployment type

- select the resource dedicated for the AOE storage

5. Create a new AOE storage volume

- go to Base - Components - Storage - List and click on “manage”

- create a new AOE volume

Creating a new volume automatically creates a new image object in openQRM.
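openQRM assigns the AOE address itself when it creates the volume. To illustrate what such an address is: ATA over Ethernet identifies each exported device by a 16-bit shelf number and an 8-bit slot number, with 0xFFFF and 0xFF reserved as broadcast values, and the Linux aoe driver exposes a target as /dev/etherd/e<shelf>.<slot>. The sketch below picks the next free shelf/slot pair; the allocation order and the function names are hypothetical and do not describe openQRM's own allocation logic.

```python
# Hypothetical helpers: pick the next unused AoE shelf.slot address and build the
# device path the Linux aoe driver uses. The allocation order is an ASSUMPTION.
BROADCAST_SHELF = 0xFFFF  # shelf 65535 is the AoE broadcast value
BROADCAST_SLOT = 0xFF     # slot 255 is the AoE broadcast value


def next_free_address(used: set[tuple[int, int]], shelf: int = 0) -> tuple[int, int]:
    """Return the first (shelf, slot) not in `used`, filling slots before shelves."""
    for s in range(shelf, BROADCAST_SHELF):      # shelves 0..65534
        for slot in range(BROADCAST_SLOT):       # slots 0..254
            if (s, slot) not in used:
                return s, slot
    raise RuntimeError("no free AoE address left")


def device_path(shelf: int, slot: int) -> str:
    """Device node the Linux aoe driver creates for an AoE target."""
    return f"/dev/etherd/e{shelf}.{slot}"


if __name__ == "__main__":
    taken = {(0, 0), (0, 1)}
    print(device_path(*next_free_address(taken)))
```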